Data comes from a wide variety of sources.


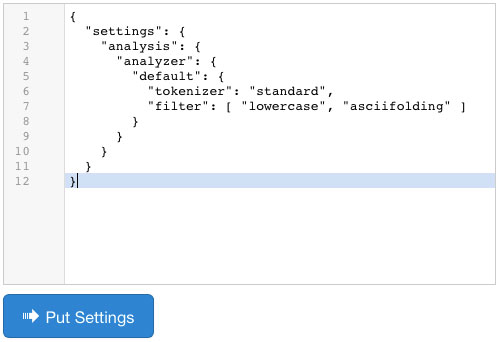

Why Standard Hash Functions Aren’t Helpful In Memory

At Intezer, we specialize in analyzing code from memory to deal with injections, process hollowing, and other memory attacks. When doing so, we extract different memory items that need to be investigated. As code is loaded to memory from a file, it differs somewhat from its original structure. As a result, it is impossible to identify memory items using a standard hash function like sha256 or md5. Therefore, conventional hash-based threat intelligence services (such as VirusTotal) and file reputation databases become virtually ineffective for investigating these items, since a standard hash function produces a unique result for each memory item.

Locality-Sensitive Hashing (Fuzzy Hashing)

When dealing with items from memory, a powerful alternative to standard hashing is locality-sensitive hashing. These hash algorithms consider the structure of data, so similar items will receive similar hash results. Examples of these hash algorithms include sdhash and ssdeep. Using these hash algorithms, it’s possible to connect memory items to each other and treat them as a group, which lets us collect more comprehensive intelligence about them in the process.

The ssdeep hash algorithm splits the file into chunks and runs a hash function on each one of them. The results of those hashes construct the final hash result. If only a few bytes of the file change, the hash value will change only slightly. This is an example of a typical ssdeep hash:

768:v7XINhXznVJ8CC1rBXdo0zekXUd3CdPJxB7mNmDZkUKMKZQbFTiKKAZTy:ShT8C+fuioHq1KEFoAU

The hash has three colon-separated parts: chunksize, chunk, and double_chunk. The chunksize is an integer that describes the size of the chunks in the following parts of the ssdeep hash. Each character of the chunk represents a part of the original file of length chunksize. The double_chunk is computed over the same data as chunk, but with chunksize * 2.

The ssdeep library has a “compare” function used for comparing two ssdeep strings, grading their similarity as a number between 0 and 100. Unfortunately, running the ssdeep compare function on a very large number of files and memory items does not scale. Brian Wallace’s excellent article in Virus Bulletin describes a way to improve the usage of ssdeep for finding similarities at scale. The article describes optimizations that improve performance when finding similarities using ssdeep, including:

1. Only examining items that have a chunksize equal to, double, or half of the chunksize of the ssdeep to compare (chunksize * 2 or chunksize / 2).
2. Only examining items that have a common seven-character substring in their chunk or double_chunk with the ssdeep to compare.

Using these two optimization rules, it is possible to drastically improve performance when trying to find similarities using ssdeep. In order to do so, the ssdeep data needs to be stored in a database.

A Short Introduction to ElasticSearch

ElasticSearch is an open source, distributed, JSON-based search and analytics engine which provides fast and reliable search results. It excels in free text searches and is designed for horizontal scalability.
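
To make the ssdeep hash layout and the two optimization rules concrete, here is a minimal Python sketch. It is not Intezer's or Wallace's implementation: the names `SsdeepHash`, `parse_ssdeep`, `seven_grams`, and `is_candidate` are hypothetical, and the substring rule is applied loosely across both chunk and double_chunk, as the text states it. The sketch only decides which pairs are worth passing to the real ssdeep compare function; it does not compute a similarity score.

```python
from typing import NamedTuple, Set


class SsdeepHash(NamedTuple):
    """The three colon-separated parts of an ssdeep hash (hypothetical helper type)."""
    chunksize: int
    chunk: str
    double_chunk: str


def parse_ssdeep(value: str) -> SsdeepHash:
    """Split 'chunksize:chunk:double_chunk' into its parts."""
    chunksize, chunk, double_chunk = value.split(":", 2)
    return SsdeepHash(int(chunksize), chunk, double_chunk)


def seven_grams(text: str) -> Set[str]:
    """Return every seven-character substring of text (empty set if text is shorter)."""
    return {text[i:i + 7] for i in range(len(text) - 6)}


def is_candidate(a: SsdeepHash, b: SsdeepHash) -> bool:
    """Apply both filtering rules; True means the pair deserves a full compare."""
    # Rule 1: chunksizes must be equal, or one must be double the other.
    sizes_related = (
        a.chunksize == b.chunksize
        or a.chunksize == 2 * b.chunksize
        or b.chunksize == 2 * a.chunksize
    )
    if not sizes_related:
        return False
    # Rule 2: at least one shared seven-character substring in chunk or double_chunk.
    grams_a = seven_grams(a.chunk) | seven_grams(a.double_chunk)
    grams_b = seven_grams(b.chunk) | seven_grams(b.double_chunk)
    return bool(grams_a & grams_b)


EXAMPLE = parse_ssdeep(
    "768:v7XINhXznVJ8CC1rBXdo0zekXUd3CdPJxB7mNmDZkUKMKZQbFTiKKAZTy:ShT8C+fuioHq1KEFoAU"
)
```

Only pairs for which `is_candidate` returns `True` would then be scored with the ssdeep library's compare function, which is the expensive step the two rules are meant to avoid.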

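
One possible way to store ssdeep data in ElasticSearch so that both optimization rules run inside the search engine is sketched below as plain Python dictionaries. Everything here is an assumption rather than the article's own configuration: the field names (`chunksize`, `chunk`, `double_chunk`, `ssdeep`), the analyzer and tokenizer names, and the typeless mapping layout used by ElasticSearch 7 and later. The idea is that an n-gram tokenizer fixed at seven characters turns "shares a seven-character substring" into an ordinary full-text match. The functions only build request bodies; sending them is left to whichever client you use.

```python
def build_index_body() -> dict:
    """Index settings and mappings that tokenize chunk fields into 7-character n-grams."""
    return {
        "settings": {
            "analysis": {
                "tokenizer": {
                    # Hypothetical name; emits every 7-character substring as a token.
                    "ssdeep_tokenizer": {"type": "ngram", "min_gram": 7, "max_gram": 7}
                },
                "analyzer": {
                    "ssdeep_analyzer": {"type": "custom", "tokenizer": "ssdeep_tokenizer"}
                },
            }
        },
        "mappings": {
            "properties": {
                "chunksize": {"type": "integer"},
                "chunk": {"type": "text", "analyzer": "ssdeep_analyzer"},
                "double_chunk": {"type": "text", "analyzer": "ssdeep_analyzer"},
                "ssdeep": {"type": "keyword"},  # full hash, kept for the final compare
            }
        },
    }


def build_candidate_query(ssdeep_value: str) -> dict:
    """Search body that returns only items passing both optimization rules."""
    chunksize_text, chunk, double_chunk = ssdeep_value.split(":", 2)
    chunksize = int(chunksize_text)
    return {
        "query": {
            "bool": {
                # Rule 1: chunksize equal, double, or half.
                "filter": [
                    {"terms": {"chunksize": [chunksize, chunksize * 2, chunksize // 2]}}
                ],
                # Rule 2: a shared 7-gram in chunk or double_chunk.
                "should": [
                    {"match": {"chunk": chunk}},
                    {"match": {"double_chunk": double_chunk}},
                ],
                "minimum_should_match": 1,
            }
        }
    }
```

The hits returned by such a query are still only candidates; each one would need to be scored against the query hash with the ssdeep compare function to get the 0 to 100 similarity grade.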